Artificial intelligence could have a profound impact on learning, but it also raises key questions.

Artificial intelligence (AI) and machine learning are no longer fantastical prospects seen only in science fiction. Products like Amazon Echo and Siri have brought AI into many homes, and experts say it’s only a matter of time before the technology has a profound impact in education, as well.

Already, there are interactive tutors and adaptive learning programs that use AI to personalize instruction for students, and AI is also helping to simplify some administrative tasks. But Kelly Calhoun Williams, an education analyst for the technology research firm Gartner Inc., cautions there is a clear gap between the promise of AI and the reality of AI.

“That’s to be expected, given the complexity of the technology,” she said.

Implications for Learning

Artificial intelligence is a broad term used to describe any technology that emulates human intelligence, such as by understanding complex information, drawing its own conclusions and engaging in natural dialog with people.

Machine learning is a subset of AI in which the software can learn or adapt like a human can. Essentially, it analyzes huge amounts of data and looks for patterns in order to classify information or make predictions. The addition of a feedback loop allows the software to “learn” as it goes by modifying its approach based on whether the conclusions it draws are right or wrong.
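To make that feedback loop concrete, here is a minimal sketch in Python of a perceptron, one of the simplest learning algorithms. It is an illustration only and not the method behind any product mentioned in this article; the function names and the toy student data are hypothetical.

```python
def train_with_feedback(examples, epochs=20, learning_rate=0.1):
    """Perceptron-style learner: weights change only when a prediction is wrong."""
    n_features = len(examples[0][0])
    weights = [0.0] * n_features
    bias = 0.0
    for _ in range(epochs):
        for features, label in examples:
            score = sum(w * x for w, x in zip(weights, features)) + bias
            prediction = 1 if score > 0 else 0
            error = label - prediction  # 0 when right, +1 or -1 when wrong
            if error != 0:
                # The feedback step: nudge the weights toward the correct answer.
                weights = [w + learning_rate * error * x
                           for w, x in zip(weights, features)]
                bias += learning_rate * error
    return weights, bias


def predict(weights, bias, features):
    """Return 1 if the weighted score is positive, otherwise 0."""
    score = sum(w * x for w, x in zip(weights, features)) + bias
    return 1 if score > 0 else 0


# Hypothetical toy data: [hours practiced, hints requested] -> mastered (1) or not (0)
EXAMPLES = [
    ([3.0, 0.0], 1),
    ([2.5, 1.0], 1),
    ([0.5, 3.0], 0),
    ([1.0, 4.0], 0),
]

weights, bias = train_with_feedback(EXAMPLES)
```

After training, `predict(weights, bias, features)` reproduces the label for each of the four toy examples. Real systems use far more data and far richer models, but the loop of predicting, checking and adjusting is the same basic idea.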

AI can process far more information than a human can, and it can perform many tasks much faster and more accurately. Some curriculum software developers have begun harnessing these capabilities to create programs that can adapt to each student’s unique circumstances.

For instance, a Seattle-based nonprofit company called Enlearn has developed an adaptive learning platform that uses machine learning technology to create highly individualized learning paths that can accelerate learning for every student.

Enlearn says its software breaks down the learning process to a whole new level of granularity, analyzing dozens of discrete yet interwoven thought processes that underlie each skill to uncover any misconceptions that might stand in the way of a student’s learning. The platform then uses this information to coach students up in areas where they’re having trouble before proceeding with a lesson.

The software is based on technology developed by the Center for Game Science at the University of Washington. That technology has been shown to improve student mastery of seventh-grade algebra by an average of 93 percent after only 1.5 hours of use, even among students as young as elementary school age.

“AI can enable a much higher level of personalization,” said Zoran Popovic, director of the Center for Game Science and founder of Enlearn. “It can help deliver just the right curriculum that a student needs at a particular moment. That’s what we’re working toward with Enlearn.”

Improving Student Safety

AI also has implications beyond the classroom. For instance, GoGuardian, a Los Angeles company, uses machine learning technology to improve the accuracy of its cloud-based Internet filtering and monitoring software for Chromebooks.

URL-based filtering can be problematic, GoGuardian said, because no system can keep up with the ever-changing nature of the Web. Instead of blocking students’ access to questionable material based on a website’s address or domain name, GoGuardian’s software uses AI to analyze the actual content of a page in real time to determine whether it’s appropriate for students.

Developers have fed the program hundreds of thousands of examples of websites that the company deems appropriate — or not appropriate — for different age levels, and the software has learned how to distinguish between these. What’s more, the program is continually improving as it receives feedback from users. When administrators indicate that a web page was flagged correctly or blocked when it shouldn’t have been, the software learns from this incident and becomes smarter over time.
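The general pattern described here, training on labeled pages and then folding in administrator corrections, can be sketched with a tiny naive Bayes text classifier. This is an assumption-laden illustration, not GoGuardian’s actual system: the `PageClassifier` class, the two labels and the sample page text are all hypothetical.

```python
import math
from collections import Counter


class PageClassifier:
    """Tiny multinomial naive Bayes over page text, updated one labeled page at a time."""

    LABELS = ("appropriate", "inappropriate")

    def __init__(self):
        self.word_counts = {label: Counter() for label in self.LABELS}
        self.page_counts = Counter()

    def learn(self, text, label):
        """Add one labeled page (initial training data or later feedback)."""
        self.word_counts[label].update(text.lower().split())
        self.page_counts[label] += 1

    def classify(self, text):
        """Return the label with the highest smoothed log-probability."""
        vocabulary = set()
        for counts in self.word_counts.values():
            vocabulary.update(counts)
        total_pages = sum(self.page_counts.values())
        best_label, best_score = None, -math.inf
        for label in self.LABELS:
            # Log prior with add-one smoothing
            score = math.log((self.page_counts[label] + 1)
                             / (total_pages + len(self.LABELS)))
            total_words = sum(self.word_counts[label].values())
            for word in text.lower().split():
                count = self.word_counts[label][word]
                score += math.log((count + 1)
                                  / (total_words + len(vocabulary) + 1))
            if score > best_score:
                best_label, best_score = label, score
        return best_label


classifier = PageClassifier()
classifier.learn("fractions worksheet practice math lesson", "appropriate")
classifier.learn("casino betting poker jackpot odds", "inappropriate")

page = "probability of poker odds math"
first_guess = classifier.classify(page)

# Hypothetical administrator feedback: the page was blocked when it shouldn't have been.
classifier.learn(page, "appropriate")
second_guess = classifier.classify(page)
```

With this toy data, the classifier first labels the probability lesson as inappropriate because it shares words with the gambling page; after the correction is fed back in, it labels the same page as appropriate.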

Like many Internet-safety solutions, GoGuardian also sends automated alerts to a designated administrator whenever students perform a questionable web search or type problematic content into an online form or Google Doc. This kind of real-time behavioral monitoring can help prevent students from harming themselves or others: If an administrator sees that a student is searching for information about suicide, for example, the administrator can follow up immediately to get that student help before it’s too late.

Traditional keyword flagging generates many false positives, because the software can’t determine the context or the student’s intent. But with AI, these real-time alerts become much more accurate, users of the technology say.
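A short sketch shows why context-free keyword matching over-alerts. The watch list and the hand-labeled searches below are hypothetical examples made up for illustration, not data from any school or vendor.

```python
FLAGGED_KEYWORDS = {"kill"}  # hypothetical watch list

# Hypothetical searches, hand-labeled: True means a human reviewer would be concerned.
SEARCHES = [
    ("to kill a mockingbird chapter summary", False),
    ("how to kill weeds in a vegetable garden", False),
    ("i want to kill myself", True),
]


def keyword_flag(query):
    """Flag a query if any word is on the watch list, ignoring context."""
    return any(word in FLAGGED_KEYWORDS for word in query.lower().split())


flagged = [(query, concern) for query, concern in SEARCHES if keyword_flag(query)]
false_positives = [query for query, concern in flagged if not concern]
print(f"{len(flagged)} alerts, {len(false_positives)} false positives")
```

All three searches trigger an alert, but only one warrants follow-up. A model that weighs the surrounding words, rather than a single keyword, is what lets a system separate the book report from the genuine warning sign.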

Shad McGaha, chief technology officer for the Wichita Falls Independent School District in Texas, said he used to receive upward of 100 email alerts per day. “They would fill up my inbox with false positives,” he said, and he would have to spend valuable time combing through these alerts to make sure students weren’t in danger.

Since GoGuardian began using AI to power its software, he has received only a handful of notifications, and all but 2 or 3 percent of these result in actionable insights.

“A few months back, we had a student who was flagged for self-harm. We were able to step in and stop that from happening,” he said. “That’s pretty incredible.”

Key Considerations

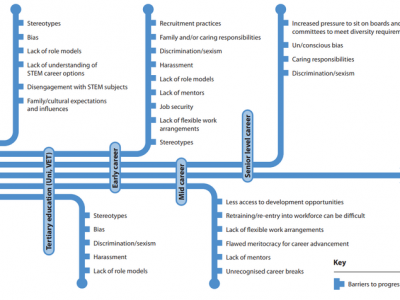

Despite its potential, AI has limitations. “AI works best when it has a vast number of samples to learn from,” Popovic said. It can be challenging to get a large enough sample size for the technology to be effective in a high-stakes environment like education, he explained, where teachers can’t afford to make mistakes with students.

For AI to be effective, the data it uses to reach its conclusions must be sound. “If data that are not accurate are thrown into the mix, it will return inaccurate results,” Calhoun Williams said. There is no such thing as unbiased data, she noted — and bias can be amplified by the iterative nature of some algorithms.

The technology also raises serious privacy concerns. It requires an increased focus not only on data quality and accuracy, but also on the responsible stewardship of this information. “School leaders need to get ready for AI from a policy standpoint,” Calhoun Williams said. For instance: What steps will administrators take to secure student data and ensure the privacy of this information? “If necessary, over-communicate about what you’re doing with data and how this will benefit students,” she advised.

Some adaptive learning products don’t use true machine learning technology, but rather a form of branching technology where the software chooses one of several routes based on how students respond to the content.
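For contrast, branching logic of this kind can be written as a fixed lookup table. In the sketch below, the step names and routes are hypothetical; the point is that every path is authored by hand in advance, and nothing in the program changes based on how students actually perform.

```python
# Hypothetical fixed routes, written in advance by a curriculum designer.
ROUTES = {
    ("fractions_quiz", "correct"): "decimals_lesson",
    ("fractions_quiz", "incorrect"): "fractions_review",
    ("fractions_review", "correct"): "decimals_lesson",
    ("fractions_review", "incorrect"): "fractions_tutorial_video",
}


def next_step(current_step, outcome):
    """Pick the next activity from the fixed table; no learning takes place."""
    return ROUTES.get((current_step, outcome), "teacher_check_in")


print(next_step("fractions_quiz", "incorrect"))
```

A student who misses the quiz is always sent to the same review, no matter how many earlier students that review did or did not help. A machine learning system, by comparison, would adjust its choices as outcome data accumulates.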

“Educators have to be careful of companies claiming to use AI in their software,” Calhoun Williams warned. “Some vendors are latching on to the AI bandwagon, but make sure you know what they mean when they make this claim.”

Some questions to ask vendors include:

- What does AI mean to you? How does this product fulfill that definition?

- How is your product superior to current options with no AI?

- Once I install your product, how will its performance improve through AI? How should I expect to devote staff time to such improvements?

- What data and computing requirements will I need to build the models for this solution?

- How can I see what will happen to the data used by your software?

Another consideration is: What is the scope of the learning environment that can be varied? Presenting the same material in a different sequence has little impact on student outcomes, Popovic explained: “If all I can do with AI is send you to chapter 7 of the material, that’s not enough to make a difference in learning. But if the software were to generate new content on the fly based on how students are responding, that would be a game changer.”